Fundamental Principles of Neural Networks: Core Mechanics of Perceptrons, Activation Functions, and Backpropagation

Neural networks can be imagined as a grand orchestra inside a machine. Each neuron is a musician, each weight is a tuning knob, and the data passing through is the sheet music guiding the performance. When tuned correctly, the orchestra produces harmony in the form of accurate predictions and meaningful insights. When not, the result is noise and confusion. Understanding how this orchestra works begins with three central ideas: perceptrons, activation functions, and backpropagation.

The Perceptron: The Smallest Decision Maker

At the most basic level of a neural network sits the perceptron, the simplest form of a neuron. Think of the perceptron as a gatekeeper at a castle door. Various messengers (input features) arrive at the gate, each carrying a message of different importance. The perceptron multiplies each input by a weight, which represents how much that message matters, and adds the results together. If this weighted sum crosses a threshold, the gate opens and a signal is sent forward; otherwise the gate stays shut.
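The gatekeeper rule can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function name `perceptron`, the hand-picked weights, and the choice of a logical AND as the task are all assumptions made for the example. The threshold is written as a bias term (the negative of the threshold), which is a common convention.

```python
def perceptron(inputs, weights, bias):
    """Return 1 if the weighted sum plus bias is positive, else 0."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return 1 if weighted_sum + bias > 0 else 0

# Hypothetical weights chosen by hand so the unit behaves like a logical AND.
# A bias of -1.5 is the same as requiring the weighted sum to exceed 1.5.
and_weights = [1.0, 1.0]
and_bias = -1.5

# Evaluate all four input pairs: (0,0), (0,1), (1,0), (1,1).
and_outputs = [perceptron([a, b], and_weights, and_bias) for a in (0, 1) for b in (0, 1)]
print(and_outputs)
```

Only the pair (1, 1) pushes the weighted sum past the threshold, so the gate opens for that input alone.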

This small decision-making unit, when connected with thousands of others, can recognize images, translate languages, and even detect emotions. The magic is not in one perceptron, but in the symphony created when they are layered and trained together. This foundational logic is what learners explore while building their first models in an AI course in Pune, where the perceptron becomes the first stepping stone to understanding deeper architectures.

Activation Functions: Bringing Life to the Model

If perceptrons are the decision makers, activation functions are the emotional reactions that influence how strongly those decisions are passed forward. Without nonlinear activation functions, a neural network collapses into a single linear transformation no matter how many layers it has, which limits it to drawing straight lines (or flat planes) through data. But real-world data is rarely linear. It bends, clusters, twists, and spreads in unpredictable ways.
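One way to see why a network without activation functions stays limited to straight lines is to stack two linear layers and compare the result with a single one. The sketch below uses one-dimensional layers and made-up parameters (`w1`, `b1`, `w2`, `b2` are hypothetical values, not from any real model) to keep the arithmetic easy to follow.

```python
import math

def linear(x, w, b):
    return w * x + b

def relu(z):
    return max(0.0, z)

w1, b1 = 2.0, -1.0   # hypothetical first-layer parameters
w2, b2 = -3.0, 0.5   # hypothetical second-layer parameters

def stacked_linear(x):
    # Two layers, no activation in between.
    return linear(linear(x, w1, b1), w2, b2)

def collapsed(x):
    # A single layer with combined weight w2*w1 and bias w2*b1 + b2.
    return linear(x, w2 * w1, w2 * b1 + b2)

def stacked_with_relu(x):
    # Same two layers, but with a ReLU between them.
    return linear(relu(linear(x, w1, b1)), w2, b2)

xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
same = all(math.isclose(stacked_linear(x), collapsed(x)) for x in xs)
bent = [stacked_with_relu(x) for x in xs]
print(same, bent)
```

The two linear layers match the single collapsed layer at every point, so the extra layer adds nothing. With the ReLU in between, the output stays flat for the first three inputs and then slopes downward, a bend that no single straight line can reproduce.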

Activation functions introduce the necessary curves. They transform the raw weighted sums into signals that capture complexity. For example:

- Sigmoid squashes any input into the range 0 to 1 along a smooth S-shaped curve, which makes its output easy to read as a probability.

- ReLU (Rectified Linear Unit) passes positive values through unchanged and outputs zero for negative ones.

- Tanh squashes values into the range -1 to 1, centered on zero, creating symmetry in the output.

Activation functions act like guides, shaping how signals travel across layers. They influence whether a network captures subtle patterns in a medical diagnosis or bold differences in handwriting recognition. They are the emotional expressiveness of the machine, turning cold computation into meaningful transformation.
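The three functions listed above can be written directly with Python's standard `math` module. This is a plain sketch for intuition, not a numerically hardened version (for instance, this `sigmoid` would overflow for extremely large negative inputs).

```python
import math

def sigmoid(z):
    # Maps any real number into the open interval (0, 1).
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    # Passes positive values through unchanged; negatives become zero.
    return max(0.0, z)

def tanh(z):
    # Maps any real number into (-1, 1), centered on zero.
    return math.tanh(z)

for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.3f}  relu={relu(z):.1f}  tanh={tanh(z):+.3f}")
```

Running the loop on a negative, a zero, and a positive input makes the different shapes visible: sigmoid is centered on 0.5, tanh on 0, and ReLU is flat on one side and linear on the other.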

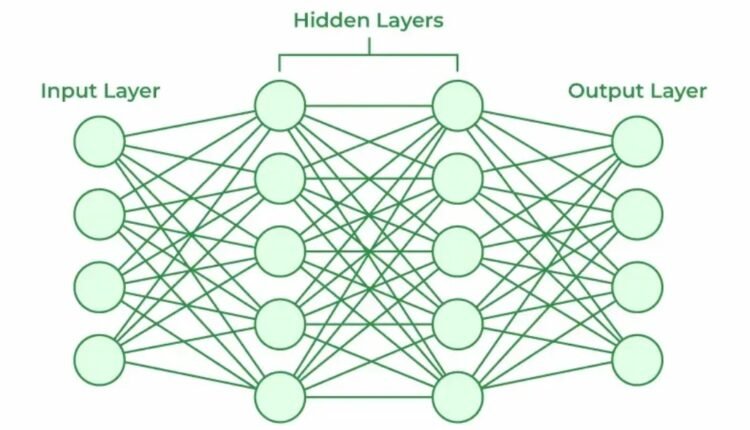

Layering and Learning: The Multi-Layer Network Structure

While a single perceptron is instructive, a true neural network emerges only when perceptrons are stacked in layers. Information flows like water through a series of connected pipes, moving from the input layer through one or more hidden layers to the final output. Each layer extracts increasingly abstract features from the raw data, loosely similar to how the brain processes visual information.

For example:

- The first layer may detect simple edges in a picture.

- The next layer may detect shapes.

- The deeper layers may detect objects like a face, a car, or a tree.

This layered abstraction is what allows neural networks to capture not just raw numbers, but higher-level structure. The transformation from simple to complex happens gradually as data flows through each layer, and training refines every layer by learning from its mistakes.
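The flow from input to hidden layer to output can be sketched as a small forward pass. Everything specific here is an assumption made for illustration: the helper name `dense`, the layer sizes (2 inputs, 3 hidden units, 1 output), the choice of ReLU and sigmoid, and the hand-picked weights. A trained network would learn its weights rather than have them typed in.

```python
import math

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each row of `weights` feeds one neuron."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical, hand-picked parameters: 2 inputs -> 3 hidden units -> 1 output.
hidden_weights = [[0.5, -0.2], [0.1, 0.8], [-0.3, 0.4]]
hidden_biases = [0.0, -0.1, 0.2]
output_weights = [[0.7, -0.5, 0.2]]
output_biases = [0.1]

def forward(inputs):
    hidden = dense(inputs, hidden_weights, hidden_biases, relu)    # input -> hidden
    return dense(hidden, output_weights, output_biases, sigmoid)   # hidden -> output

prediction = forward([1.0, 2.0])
print(prediction)
```

Each call to `dense` is one layer of pipes: the output list of one layer becomes the input list of the next.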

Backpropagation: The Learning Engine

If the perceptron is the gatekeeper and activation functions are the emotional responses, backpropagation is the learning mechanism that keeps improving the entire system. Backpropagation is a feedback loop. After the network makes a prediction, it compares the outcome to the correct answer and measures the error with a loss function. It then works backward through the network, layer by layer, using the chain rule to compute how much each weight contributed to that error, and each weight is adjusted in the direction that reduces it.
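The backward step can be illustrated on a deliberately tiny network with one hidden unit and one output. This is a sketch under stated assumptions: the tanh hidden unit, the squared-error loss, and the values of `x`, `y`, `w1`, and `w2` are all chosen for the example. The hand-derived gradients are compared against a numerical estimate as a sanity check.

```python
import math

def forward(x, w1, w2):
    h = math.tanh(w1 * x)      # hidden activation
    y_hat = w2 * h             # linear output
    return h, y_hat

def loss(x, y, w1, w2):
    _, y_hat = forward(x, w1, w2)
    return 0.5 * (y_hat - y) ** 2

def backward(x, y, w1, w2):
    """Apply the chain rule from the output back toward the input."""
    h, y_hat = forward(x, w1, w2)
    d_y_hat = y_hat - y                 # dL/dy_hat
    grad_w2 = d_y_hat * h               # dL/dw2
    d_h = d_y_hat * w2                  # error passed back to the hidden unit
    grad_w1 = d_h * (1.0 - h * h) * x   # dL/dw1, using tanh'(z) = 1 - tanh(z)^2
    return grad_w1, grad_w2

x, y = 1.5, 0.8          # hypothetical training example
w1, w2 = 0.4, -0.6       # hypothetical starting weights
grad_w1, grad_w2 = backward(x, y, w1, w2)

# Numerical check: nudge each weight slightly and measure how the loss changes.
eps = 1e-6
numeric_w1 = (loss(x, y, w1 + eps, w2) - loss(x, y, w1 - eps, w2)) / (2 * eps)
numeric_w2 = (loss(x, y, w1, w2 + eps) - loss(x, y, w1, w2 - eps)) / (2 * eps)
print(grad_w1, numeric_w1)
print(grad_w2, numeric_w2)
```

The error signal `d_y_hat` is computed once at the output and reused for every weight behind it, which is what lets backpropagation scale to networks with many layers.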

This is similar to a student solving math problems. After seeing the correct solution, the student revisits each step and corrects the misunderstandings. The difference is that neural networks learn at a remarkable scale, adjusting thousands or millions of weights at every training step using gradients and optimization algorithms such as gradient descent.

This learning process repeats many times, gradually reducing errors and improving accuracy. Over time, the network becomes finely tuned, like a pianist who has practiced scales until their hands move effortlessly.
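The repeat-and-improve cycle can be sketched as a short training loop. To keep it small, this example trains a single sigmoid neuron on the logical OR function with plain gradient descent and a cross-entropy loss; the task, the loss, the learning rate, and the number of epochs are all assumptions made for illustration, not a recommended recipe.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Logical OR as a tiny training set: ([x1, x2], target).
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

def predict(weights, bias, inputs):
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)

def mean_loss(weights, bias):
    """Average cross-entropy over the training set."""
    total = 0.0
    for inputs, target in data:
        p = predict(weights, bias, inputs)
        total -= target * math.log(p) + (1.0 - target) * math.log(1.0 - p)
    return total / len(data)

def train(epochs=2000, learning_rate=1.0):
    weights, bias = [0.0, 0.0], 0.0
    history = [mean_loss(weights, bias)]
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for inputs, target in data:
            # For sigmoid + cross-entropy, the gradient w.r.t. the weighted sum
            # is simply (prediction - target).
            error = predict(weights, bias, inputs) - target
            grad_w = [g + error * x for g, x in zip(grad_w, inputs)]
            grad_b += error
        # Gradient descent: step against the average gradient.
        weights = [w - learning_rate * g / len(data) for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / len(data)
        history.append(mean_loss(weights, bias))
    return weights, bias, history

weights, bias, history = train()
predictions = [round(predict(weights, bias, inputs)) for inputs, _ in data]
print(history[0], history[-1], predictions)
```

The `history` list records the loss after every pass over the data, so comparing its first and last entries shows the error shrinking as the practice repeats.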

Learners who engage deeply with these principles, especially in structured learning environments such as workshops or an AI course in Pune, gain the clarity and confidence required to build real-world machine learning solutions. Understanding backpropagation is often the moment when the black box becomes transparent, and neural networks begin to feel intuitive rather than mysterious.

Conclusion: The Harmony of the Network

Neural networks are far more than stacks of equations. They are adaptive computational systems, loosely inspired by biology, that adjust themselves to data and learn to interpret the world. The perceptron provides the foundation, activation functions give expressiveness, and backpropagation enables self-improvement.

Just like an orchestra tuning before a grand performance, each component must work in balance. When they do, the result is a powerful model that can classify images, forecast markets, interpret language, and more. Mastering these fundamentals is not just an academic exercise; it is the gateway to building intelligent systems that reshape industries and redefine technological possibility.
